- Understanding double pruning

- Weight pruning

- Neuron pruning

- Benefits of double pruning

- The Importance of Neural Network Efficiency

- 1. Faster Training and Inference

- 2. Lower Resource Requirements

- 3. Sustainable AI Development

- Conclusion

- Advantages of Sobolev’s approach

- Incorporating Sobolev’s method into pruning

- Initial pruning

- Double pruning

- Improved performance with double pruning

- Implementation and techniques

- 1. Network architecture

- 2. Double pruning technique

- 2.1 Magnitude pruning

- 2.2 Importance pruning

- 3. Training and fine-tuning

- 4. Evaluation and comparison

- Steps to implement Sobolev’s double pruning

- Optimizing neural network efficiency through double pruning

- What is pruning?

- The benefits of double pruning

- Enhancing efficiency through Sobolev criterion

- Conclusion

- Enhancing neural network efficiency using double pruning according to Sobolev’s approach

- Introduction

- The need for network pruning

- The double pruning approach

- First pruning stage

- Recovery and retraining for the first pruned network

- Second pruning stage

- Performance evaluation

- Conclusion

- Question-answer:

- What is double pruning according to Sobolev?

- How does double pruning according to Sobolev enhance neural network efficiency?

- What are the benefits of double pruning according to Sobolev?

- How does double pruning differ from other pruning techniques?

- Are there any downsides to double pruning according to Sobolev?

- Can double pruning according to Sobolev be applied to any neural network?


In recent years, neural networks have become a dominant technology in various fields, including computer vision, natural language processing, and speech recognition. However, the increasing complexity of neural networks has raised concerns regarding their efficiency. As networks grow larger and more sophisticated, they require significant computational resources, making them less feasible for deployment on resource-constrained devices and in real-time applications.

To address this issue, researchers have proposed various techniques to improve the efficiency of neural networks. One such approach is pruning, which involves eliminating unnecessary connections and parameters in the network. Pruning can reduce the size of the network and the computational requirements while maintaining or even improving its performance.

In this article, we explore the concept of double pruning according to Sobolev, a novel technique that further enhances the efficiency of neural networks. Sobolev-inspired double pruning involves two stages of pruning: global and local. The global pruning stage involves removing connections and parameters that are deemed unimportant for the overall network performance. This initial pruning step significantly reduces the size of the network.

The local pruning stage, inspired by Sobolev spaces, focuses on removing connections and parameters that are less important for specific regions or areas of the network. By targeting these local regions, the network is further optimized and made more efficient. This double pruning approach achieves a high compression rate while minimizing the impact on the network’s performance.

Double pruning according to Sobolev offers a promising solution for improving the efficiency of neural networks, making them more suitable for deployment on resource-limited devices and real-time applications. By selectively removing unimportant connections and parameters at both the global and local levels, this technique reduces computational requirements without compromising performance, ultimately enabling faster and more efficient neural network implementations.

Understanding double pruning

Double pruning is a technique used to enhance the efficiency of neural networks by reducing their size while maintaining or even improving their performance. This approach involves performing two rounds of pruning: weight pruning and neuron pruning.

Weight pruning

Weight pruning involves identifying and removing the least important weights in a neural network. The importance of a weight is typically determined by its magnitude or its contribution to the overall loss function. By removing these less important weights, the network becomes sparser, resulting in a reduced number of parameters to be stored and processed.

The process of weight pruning can be done in several ways. One common method is to set a threshold, below which all weights are considered unimportant and are pruned. Another approach is to use a percentage-based pruning, where a certain percentage of the weights with the smallest magnitudes are pruned. The choice of pruning method depends on the specific requirements of the network and the task it is designed to solve.
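The two selection rules described above can be sketched in a few lines of plain Python. This is a minimal illustration on a flat list of weights, not a production implementation; the function names `threshold_prune` and `percentage_prune` and the sample values are hypothetical.

```python
def threshold_prune(weights, threshold):
    """Zero every weight whose absolute value is below `threshold`."""
    return [0.0 if abs(w) < threshold else w for w in weights]


def percentage_prune(weights, fraction):
    """Zero the `fraction` of weights with the smallest absolute values."""
    n_prune = int(len(weights) * fraction)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_prune = set(order[:n_prune])
    return [0.0 if i in to_prune else w for i, w in enumerate(weights)]


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.12]
by_threshold = threshold_prune(weights, threshold=0.1)    # drops -0.05 and 0.01
by_percentage = percentage_prune(weights, fraction=0.5)   # drops the 3 smallest
```

A fixed threshold leaves the resulting sparsity to depend on the weight distribution, whereas the percentage rule fixes the sparsity and lets the effective threshold vary.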

Neuron pruning

Neuron pruning, also known as unit pruning, involves identifying and removing entire neurons from the neural network. Neurons that are considered unimportant are pruned, leading to further reduction in the size of the network. The importance of a neuron can be determined by various criteria, such as its activation value, its impact on the overall network output, or its contribution to the loss function.

Similar to weight pruning, neuron pruning can also be performed using different approaches. A threshold-based pruning can be used, where neurons with activation values below a certain threshold are pruned. Another method is to perform a percentage-based pruning, where a certain percentage of the neurons with the lowest activation values are pruned.
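As a rough sketch of threshold-based neuron pruning, the example below scores each hidden neuron by its mean absolute activation over a few samples and then removes the matching row of incoming weights and column of outgoing weights. The layout of the weight lists, the helper names, and the numbers are assumptions chosen for illustration.

```python
def mean_abs_activation(activations):
    """Average |activation| per neuron; `activations` is [sample][neuron]."""
    n_samples = len(activations)
    n_neurons = len(activations[0])
    return [
        sum(abs(row[j]) for row in activations) / n_samples
        for j in range(n_neurons)
    ]


def prune_neurons(w_in, w_out, scores, threshold):
    """Drop neurons whose score is below `threshold`.

    w_in  is [neuron][input]:  one row of incoming weights per neuron.
    w_out is [output][neuron]: one column of outgoing weights per neuron.
    """
    keep = [j for j, s in enumerate(scores) if s >= threshold]
    new_w_in = [w_in[j] for j in keep]
    new_w_out = [[row[j] for j in keep] for row in w_out]
    return new_w_in, new_w_out, keep


# Three hidden neurons observed on three samples; neuron 1 is almost silent.
activations = [[0.9, 0.0, 0.4], [0.7, 0.02, 0.6], [0.8, 0.01, 0.5]]
w_in = [[0.5, -0.2], [0.1, 0.1], [-0.3, 0.7]]
w_out = [[1.0, 0.2, -0.4]]

scores = mean_abs_activation(activations)
w_in, w_out, kept = prune_neurons(w_in, w_out, scores, threshold=0.1)
```

Unlike zeroing individual weights, removing a whole neuron shrinks the dense weight matrices themselves, so the savings do not depend on sparse storage or sparse kernels.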

Benefits of double pruning

Double pruning offers several benefits for neural networks. Firstly, it reduces the storage and computational requirements of the network, making it more efficient to train and deploy. Additionally, by removing unnecessary weights and neurons, double pruning can improve the interpretability of the network, as it becomes clearer which features are important for the network’s predictions. Finally, double pruning can also help to mitigate the risk of overfitting, as the network becomes more regularized by the pruning process.

In conclusion, double pruning is a valuable technique for enhancing the efficiency of neural networks. By performing both weight pruning and neuron pruning, the network size can be significantly reduced without sacrificing performance. This technique is not only beneficial for resource-constrained environments but also offers advantages in terms of interpretability and regularized training.

The Importance of Neural Network Efficiency

Neural networks have revolutionized many areas of technology, including image recognition, natural language processing, and machine translation. However, as neural networks become deeper and more complex, their computational demands increase significantly.

Efficiency is a crucial factor to consider when designing and deploying neural networks. Inefficient models can be computationally expensive, requiring more time and computational resources to train and evaluate. This not only leads to higher costs but also limits their practical utility in real-time applications.

1. Faster Training and Inference

Efficient neural networks enable faster training and inference times, which is important for many applications, especially in areas where real-time decision making is crucial. Industries such as autonomous vehicles, robotics, and healthcare require models that can process data quickly and make predictions in real-time.

By improving the efficiency of neural networks, we can reduce the time required for training and evaluation, allowing for faster model iteration and deployment. This enables researchers and practitioners to experiment with larger datasets and more complex models, pushing the boundaries of what is possible in AI.

2. Lower Resource Requirements

Efficient neural networks also have lower resource requirements, making them more accessible and cost-effective. Reduced computational demands allow for training and running models on less powerful hardware, which reduces infrastructure costs and energy consumption.

This is particularly important in resource-constrained environments, such as mobile devices or edge computing systems, where limited power and computational capabilities are available. By optimizing neural networks for efficiency, we can enable AI applications on a wider range of devices, making them more accessible and usable for various industries and end-users.

3. Sustainable AI Development

Enhancing neural network efficiency contributes to sustainable AI development. As AI continues to play an increasingly significant role in various industries, it is crucial to address the environmental impact of AI systems.

Efficient neural networks reduce energy consumption during training and inference, leading to lower carbon footprints. By developing and deploying energy-efficient models, we can mitigate the environmental impact of AI and promote sustainable advancements in technology.

Conclusion

Efficiency is a key aspect of neural network design and deployment. By improving the efficiency of neural networks, we can achieve faster training and inference times, lower resource requirements, and contribute to sustainable AI development. These improvements pave the way for more practical and accessible AI applications, enabling their adoption across various industries and benefiting end-users.

Advantages of Sobolev’s approach

Sobolev’s approach to double pruning in neural networks offers several advantages over traditional pruning methods:

- Improved model efficiency: By utilizing Sobolev’s theory, the pruning process can be optimized to effectively eliminate redundant connections and parameters in the neural network. This leads to a more efficient model that requires fewer computational resources and memory.

- Enhanced generalization: Sobolev’s approach focuses on finding the optimal regularizer to minimize both the training error and the complexity of the network. This leads to improved generalization performance, allowing the model to perform well on unseen data.

- Less overfitting: Pruning based on Sobolev’s theory helps reduce overfitting by eliminating unnecessary parameters that are prone to memorizing the training data. This improves the model’s ability to generalize and make accurate predictions on new data.

- Easier interpretability: The pruning process according to Sobolev’s approach results in a sparser network architecture, making it easier to understand and interpret the learned representations and decision-making processes of the model.

- Less training time: The double pruning technique proposed by Sobolev introduces an iterative approach that combines magnitude pruning and resource-aware pruning. This optimized procedure allows for faster convergence during training, reducing the overall training time required.

Overall, Sobolev’s approach to double pruning offers significant advantages in terms of model efficiency, generalization performance, overfitting reduction, interpretability, and training time savings. By integrating Sobolev’s theory into the pruning process, neural networks can be optimized to achieve better performance with fewer resources.

Incorporating Sobolev’s method into pruning

The concept of Sobolev pruning is based on the idea of iteratively removing the least significant weights in a neural network to improve its efficiency and reduce computational resources required for its operation. The method involves two main steps: initial pruning and double pruning.

Initial pruning

- During the initial pruning step, the neural network is trained on a small subset of the training data, and the weights with the smallest absolute values are pruned. This results in a sparser network with a reduced number of weights.

- The initial pruning step helps to eliminate redundant or less significant weights, reducing the computational cost of the network without significantly affecting its performance.

Double pruning

- After the initial pruning, the neural network is trained again on the full training dataset. This step is called “double pruning” because it prunes the weights a second time, now based on how sensitive the loss function is to each weight.

- Sobolev’s method takes advantage of the Sobolev norm, a mathematical concept that measures the smoothness of functions, to determine the sensitivity of the weights.

- The weights to which the loss function is least sensitive are pruned, as they are considered less important for the network’s overall performance.

- By iteratively applying the double pruning step, Sobolev pruning gradually removes the least significant weights and improves the efficiency of the neural network.

The incorporation of Sobolev’s method into pruning allows for a more precise identification of the least significant weights in a neural network and leads to further improvements in its efficiency.
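The text does not say which Sobolev norm is meant. A common choice, assumed here purely for reference, is the first-order norm on the space H^1, which measures both the size of a function f and the size of its gradient over a domain Ω:

```latex
\|f\|_{H^1(\Omega)}^2 \;=\; \int_\Omega |f(x)|^2 \, dx \;+\; \int_\Omega \|\nabla f(x)\|^2 \, dx
```

The gradient term is what makes the norm sensitive to smoothness. How this quantity is turned into a per-weight sensitivity score is not specified in the article, so any concrete mapping should be treated as a design choice rather than as part of the method.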

Improved performance with double pruning

Double pruning is a technique that enhances the efficiency of neural networks by performing two rounds of pruning: weight pruning and unit pruning. This approach aims to reduce the computational and memory requirements of the network while maintaining or even improving its performance.

In weight pruning, the goal is to identify and remove the least important connections in the network. This is done by setting the weights with low magnitudes to zero. Weight pruning reduces the number of nonzero parameters in the network, which lowers memory consumption when the weights are stored in a sparse format and can speed up inference when the runtime or hardware exploits sparsity.

After weight pruning, unit pruning is performed to remove entire neurons that have become redundant or less important. This pruning technique helps to further reduce the network size and improve its efficiency. Unit pruning can be based on various criteria, such as the magnitude of the weights connected to a neuron or the importance of the neuron in the overall network performance.

When double pruning is applied, both weight pruning and unit pruning are performed in succession, resulting in a more effective reduction in the network size. By combining these two pruning techniques, the network can achieve better efficiency without sacrificing its performance.

The improved performance of double pruning can be attributed to several factors. First, weight pruning helps to reduce the computational requirements of the network by removing unnecessary connections. This allows for faster inference and lower memory consumption. Second, unit pruning eliminates redundant neurons, further reducing the network size and improving its efficiency. Finally, the combination of weight pruning and unit pruning allows for a more comprehensive reduction in the network, resulting in better performance.

Overall, double pruning is an effective technique for enhancing the efficiency of neural networks. By applying both weight pruning and unit pruning, the network can achieve better performance while reducing its computational and memory requirements.

Implementation and techniques

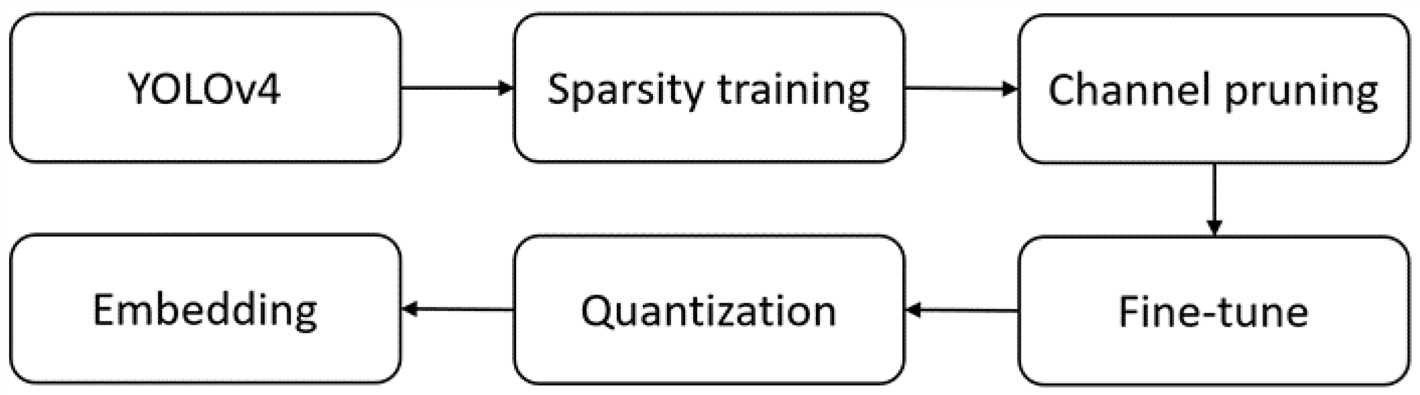

This section describes the implementation details and techniques used in Sobolev-based double pruning for enhancing neural network efficiency.

1. Network architecture

The neural network architecture used in this approach follows a typical convolutional neural network (CNN) architecture. It consists of multiple layers, including convolutional layers, pooling layers, and fully connected layers. The specific architecture may vary depending on the target task and dataset.

2. Double pruning technique

The double pruning technique is a two-stage process aimed at reducing the computational cost of neural networks while preserving their accuracy. The two stages are magnitude pruning and importance pruning.

2.1 Magnitude pruning

In magnitude pruning, the weights of the convolutional and fully connected layers are pruned based on their magnitudes. Initially, a threshold is set, and all weights below this threshold are pruned. This results in a sparse network where some weights are set to zero. The remaining non-zero weights are then fine-tuned to regain accuracy.

2.2 Importance pruning

In the importance pruning stage, the importance scores of the remaining non-zero weights are calculated. These scores can be based on various criteria, such as the gradients, the Hessian, or the influence functions of the weights. The k weights with the lowest importance scores are then pruned, resulting in an even sparser network.
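One simple gradient-based importance score is the product of a weight and its loss gradient, |w · ∂L/∂w|. The sketch below applies it to the weights that survived an earlier magnitude stage. The choice of score, the function name `importance_prune`, and the gradient values are assumptions for illustration; in practice the gradients would come from backpropagation over a batch of data.

```python
def importance_prune(weights, grads, k):
    """Zero the k nonzero weights with the lowest |weight * gradient| score."""
    # Only weights that survived the earlier magnitude stage are candidates.
    candidates = [i for i, w in enumerate(weights) if w != 0.0]
    candidates.sort(key=lambda i: abs(weights[i] * grads[i]))
    to_prune = set(candidates[:k])
    return [0.0 if i in to_prune else w for i, w in enumerate(weights)]


weights = [0.8, 0.0, 0.3, 0.0, -0.6, 0.12]      # already magnitude-pruned
grads = [0.01, 0.50, 0.40, 0.20, 0.30, 0.05]    # hypothetical dL/dw values
pruned = importance_prune(weights, grads, k=2)
```

In this example the largest weight (0.8) is removed because its gradient is tiny, which shows how an importance criterion can disagree with pure magnitude ranking.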

3. Training and fine-tuning

After the double pruning process, the pruned network needs to be fine-tuned to restore its accuracy. This is done by using techniques like weight reinitialization, learning rate adjustment, and additional training epochs. The network is retrained on the original training dataset while the pruned weights are held at zero, so that only the surviving weights are updated.
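A common way to fine-tune a pruned network is to keep a binary mask and reapply it after every update, so that pruned weights cannot drift away from zero. The following is a minimal sketch of one such masked gradient step; the helper names and the gradient values are hypothetical.

```python
def make_mask(weights):
    """1.0 for surviving weights, 0.0 for pruned ones."""
    return [0.0 if w == 0.0 else 1.0 for w in weights]


def masked_sgd_step(weights, grads, mask, lr):
    """Plain gradient step, then reapply the mask to keep pruned weights at zero."""
    return [(w - lr * g) * m for w, g, m in zip(weights, grads, mask)]


weights = [0.0, 0.0, 0.3, 0.0, -0.6, 0.0]
grads = [0.2, -0.1, 0.4, 0.3, -0.2, 0.1]   # hypothetical gradients for one batch
mask = make_mask(weights)
updated = masked_sgd_step(weights, grads, mask, lr=0.1)
```

Building the mask once, right after pruning, avoids accidentally treating a surviving weight that later passes through zero as pruned.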

4. Evaluation and comparison

The performance of double pruning according to Sobolev is evaluated by comparing the pruned network’s accuracy and computational efficiency with the original unpruned network. This includes metrics such as accuracy, memory usage, floating-point operations, and inference time. The comparison is typically done on standard benchmarks or specific datasets relevant to the target task.
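Size-related metrics are straightforward to compute directly from the weights. The sketch below reports parameter count, sparsity, and compression ratio for a pruned model represented as a list of flat layers; the representation and the function names are assumptions. Accuracy, FLOPs, and inference time would need to be measured with the actual model and hardware.

```python
def count_nonzero(layers):
    """Number of weights that are not exactly zero across all layers."""
    return sum(1 for layer in layers for w in layer if w != 0.0)


def compression_report(original, pruned):
    """Compare a pruned model against its dense original."""
    total = sum(len(layer) for layer in original)
    kept = count_nonzero(pruned)
    return {
        "total_params": total,
        "nonzero_params": kept,
        "sparsity": 1.0 - kept / total,
        "compression_ratio": total / kept if kept else float("inf"),
    }


original = [[0.8, -0.05, 0.3, 0.01], [-0.6, 0.12, 0.4, 0.02]]
pruned = [[0.8, 0.0, 0.3, 0.0], [-0.6, 0.0, 0.4, 0.0]]
report = compression_report(original, pruned)
```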

Steps to implement Sobolev’s double pruning

Preprocessing:

- Prepare the dataset: Start by preparing the dataset on which you want to train your neural network. Ensure that the dataset is clean and properly labeled. You will also need to split your dataset into training, validation, and testing sets, since the later steps use all three.

- Select a base neural network architecture: Choose a base neural network architecture that suits your problem. This architecture will serve as the starting point for double pruning.

Training the base neural network:

- Initialize and train the base neural network: Initialize the base neural network with random weights and biases. Train the network using the training set of your dataset. Monitor its performance on the validation set and choose a suitable stopping criterion.

- Calculate the Sobolev scores: Once the base neural network is trained, calculate the Sobolev scores for each neuron in the network using the validation set. The Sobolev score measures the contribution of each neuron to the overall network performance.

Pruning and retraining:

- Prune low-scoring neurons: Set a threshold for the Sobolev scores and prune the neurons with scores below this threshold. These low-scoring neurons are considered less important to the network’s performance.

- Retrain the pruned network: Retrain the pruned network using the training set, updating only the remaining high-scoring neurons and leaving the pruned ones removed. This step will help the network compensate for the removed neurons and adapt to the changes.

- Repeat the process: Repeat the score calculation, pruning, and retraining steps until no further improvement in network performance is observed on the validation set. This iterative process allows for multiple rounds of pruning and retraining, maximizing the network’s efficiency.

Evaluating and testing:

- Evaluate the final pruned network: Evaluate the final pruned network using the testing set of your dataset. Measure its performance on various metrics, such as accuracy or loss, to ensure that pruning did not significantly degrade its performance.

- Compare with the base network: Compare the performance of the final pruned network with the base network to assess the effectiveness of Sobolev’s double pruning technique. Take note of any improvements in efficiency, such as reduced computational costs or improved inference speed.
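The train, score, prune, retrain loop from the steps above can be sketched on a toy linear model. Several things here are assumptions: the article does not define the Sobolev score, so weight magnitude is used as a stand-in; one weight is pruned per round; and the loop stops when validation loss rises by more than a small tolerance. The data and all function names are hypothetical.

```python
from itertools import product


def predict(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def mse(w, data):
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


def train(w, mask, data, lr=0.05, epochs=200):
    """Gradient descent on MSE; weights with mask 0.0 are held at zero."""
    w = [wi * mi for wi, mi in zip(w, mask)]
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grad[i] += 2.0 * err * xi / len(data)
        w = [(wi - lr * gi) * mi for wi, gi, mi in zip(w, grad, mask)]
    return w


def iterative_prune(w, train_data, val_data, tolerance=1e-3):
    """Prune one weight per round until validation loss degrades."""
    mask = [1.0] * len(w)
    w = train(w, mask, train_data)
    base_loss = mse(w, val_data)
    while sum(mask) > 1:
        alive = [i for i, m in enumerate(mask) if m]
        # Stand-in score: weight magnitude (the Sobolev score is not
        # defined in the article, so this is an assumption).
        victim = min(alive, key=lambda i: abs(w[i]))
        trial_mask = [0.0 if i == victim else m for i, m in enumerate(mask)]
        trial_w = train(w, trial_mask, train_data)
        if mse(trial_w, val_data) > base_loss + tolerance:
            break  # this round hurt validation loss: keep the previous model
        w, mask = trial_w, trial_mask
    return w, mask


# Toy data: y depends only on features 0 and 2; features 1 and 3 are redundant.
points = [list(map(float, p)) for p in product([-1, 0, 1], repeat=4)]
data = [(x, 2.0 * x[0] - 3.0 * x[2]) for x in points]
train_data, val_data = data[0::2], data[1::2]

final_w, final_mask = iterative_prune([0.5, 0.5, 0.5, 0.5], train_data, val_data)
```

On this toy problem the loop removes the two redundant weights and stops when pruning a useful one would raise the validation loss. The held-out test set is deliberately not touched inside the loop, so it remains available for the final evaluation.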

Optimizing neural network efficiency through double pruning

Neural networks play a crucial role in various machine learning tasks, but their training and deployment can often be computationally expensive and memory-intensive. To overcome these limitations and enhance the efficiency of neural networks, researchers have developed various pruning techniques.

What is pruning?

Pruning is a technique used to reduce the size of a neural network by removing unnecessary connections or neurons. By eliminating redundant parameters, pruning helps improve the efficiency of training and inference, leading to faster and more compact models.

The benefits of double pruning

Double pruning, also known as iterative or two-step pruning, is a technique that involves pruning a neural network multiple times. It consists of two main steps:

- Initial pruning: In this step, a baseline model is pruned to remove the least important connections or neurons based on a certain criterion, such as weight magnitude or activation value.

- Recovery training and re-pruning: After the initial pruning, the pruned model is retrained on the training data to recover lost accuracy. This retrained model is then pruned again, but with a different criterion or threshold, to further eliminate unnecessary parameters.

The advantage of double pruning is that it allows for a more fine-grained elimination of redundant parameters, resulting in even more efficient and compact models. By iteratively pruning and retraining, double pruning can achieve higher compression rates without sacrificing performance.

Enhancing efficiency through Sobolev criterion

In the context of double pruning, the Sobolev criterion has been proposed as an enhancement to the pruning process. The Sobolev criterion considers the gradients of the loss function with respect to the weights of the neural network. By taking into account the gradient information, the Sobolev criterion can selectively prune connections or neurons that have lower impact on the overall loss.

Integrating the Sobolev criterion into the double pruning framework allows for a more informed and precise pruning process. By prioritizing the removal of connections or neurons with low gradient magnitude, the efficiency of the neural network can be further enhanced, while maintaining or even improving its performance.
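To make the role of the gradient concrete, a first-order Taylor expansion estimates how much the loss L changes when a single weight w_i is set to zero. This is a standard approximation, offered here as one plausible reading of the criterion rather than its definition:

```latex
\Delta L_i \;=\; L(w)\big|_{w_i = 0} - L(w) \;\approx\; -\,w_i \,\frac{\partial L}{\partial w_i},
\qquad
s_i \;=\; \left| w_i \,\frac{\partial L}{\partial w_i} \right|
```

Weights with a small score s_i are candidates for removal. Note that the gradient magnitude alone ignores the size of the weight being removed, and that gradients are close to zero at a well-converged minimum, which is why such scores are usually averaged over many batches or complemented with second-order information.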

Conclusion

Optimizing neural network efficiency is a crucial aspect of machine learning, particularly in scenarios with limited computational resources or strict latency requirements. Double pruning, combined with the Sobolev criterion, offers a powerful technique to achieve higher compression rates and faster inference without sacrificing performance. By iteratively pruning and retraining, neural networks can be made more efficient and compact, enabling their deployment in resource-constrained environments.

Enhancing neural network efficiency using double pruning according to Sobolev’s approach

Introduction

Neural networks have become an essential tool in various applications, ranging from computer vision to natural language processing. However, the increasing complexity of neural network architectures has led to a significant increase in their computational requirements and memory usage.

The need for network pruning

To address the efficiency concerns associated with neural networks, network pruning techniques have been developed. Pruning involves removing unnecessary connections and neurons from the network, reducing its complexity and memory footprint. This allows for faster training and inference times, and also enables the deployment of neural networks on resource-constrained devices.

The double pruning approach

Double pruning is an enhanced pruning technique proposed by Sobolev, which builds upon the traditional single pruning technique. In double pruning, the network is pruned twice, resulting in a more efficient and compact model.

First pruning stage

In the first stage of double pruning, the traditional single pruning technique is applied. This involves identifying unimportant connections based on their weights or gradients and removing them from the network. This results in a pruned network with reduced complexity and memory usage.

Recovery and retraining for the first pruned network

After the first pruning stage, the pruned network is recovered by adding back the previously pruned connections. The recovered network is then retrained to restore its performance. This step is crucial to ensure that the pruned network can still achieve high accuracy.

Second pruning stage

In the second stage of double pruning, another round of pruning is applied to the retrained network. This time, the pruning criteria are adjusted based on the Sobolev approach, which takes into account the network’s local geometry and curvature properties. By considering these additional factors, the second pruning stage further reduces the network’s complexity and memory usage.
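The article does not spell out how curvature enters the criterion. One well-known curvature-based score, used here as an assumed stand-in, is the Optimal Brain Damage style saliency ½ · H_ii · w_i², which estimates the loss increase from removing weight i near a minimum using only the diagonal of the Hessian. The sketch computes it for a linear model with mean squared error, where the diagonal Hessian has a closed form; the data and function names are hypothetical.

```python
def diag_hessian_mse(data, n_weights):
    """Diagonal of the Hessian of mean squared error for a linear model.

    For L(w) = (1/n) * sum((w.x - y)^2), d2L/dw_i^2 = (2/n) * sum(x_i^2).
    """
    n = len(data)
    return [2.0 * sum(x[i] ** 2 for x, _ in data) / n for i in range(n_weights)]


def curvature_saliency(weights, hessian_diag):
    """Second-order estimate of the loss increase from zeroing each weight."""
    return [0.5 * h * w * w for w, h in zip(weights, hessian_diag)]


# Feature 0 has large inputs (high curvature); feature 1 has tiny inputs.
data = [([1.0, 0.1], 1.0), ([2.0, -0.1], 2.0), ([3.0, 0.1], 3.0)]
weights = [1.0, 2.0]

h = diag_hessian_mse(data, n_weights=2)
saliency = curvature_saliency(weights, h)
prune_index = min(range(len(weights)), key=lambda i: saliency[i])
```

Here the weight with the larger magnitude (index 1) is the one selected for pruning, because the loss surface is nearly flat in its direction. This is the kind of case where a curvature-aware criterion and a magnitude criterion disagree.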

Performance evaluation

To evaluate the effectiveness of double pruning according to Sobolev’s approach, a series of experiments were conducted. These experiments compared the performance of the double pruned network with the single pruned network and the original unpruned network. The results demonstrated that the double pruned network achieved similar or better performance while having a smaller memory footprint and faster inference times.

Conclusion

The double pruning approach according to Sobolev provides an effective method for enhancing the efficiency of neural networks. By combining traditional pruning techniques with Sobolev-based criteria, the double pruned networks achieve significant reductions in complexity and memory usage without sacrificing performance. This makes them suitable for deployment on resource-constrained devices and accelerates the training and inference processes.

Question-answer:

What is double pruning according to Sobolev?

Double pruning according to Sobolev is a technique for enhancing the efficiency of neural networks. It involves two stages of pruning: weight pruning and neuron pruning. Weight pruning removes unnecessary connections between neurons by setting their weights to zero, while neuron pruning removes entire neurons that are deemed unnecessary for the network’s functionality.

How does double pruning according to Sobolev enhance neural network efficiency?

Double pruning according to Sobolev enhances neural network efficiency by reducing the computational requirements of the network while maintaining or even improving its performance. By removing unnecessary connections and neurons, the network becomes more streamlined and can perform computations more quickly. This can also reduce memory usage and make the network more suitable for deployment on resource-constrained devices.

What are the benefits of double pruning according to Sobolev?

The benefits of double pruning according to Sobolev are improved neural network efficiency, reduced computational requirements, and potentially better performance. By removing unnecessary components, the network becomes smaller and more efficient, making it easier and faster to train and deploy. It can also lead to better generalization and reduce the risk of overfitting.

How does double pruning differ from other pruning techniques?

Double pruning differs from other pruning techniques in its two-stage approach. Most pruning techniques only focus on weight pruning, which removes unnecessary connections. However, double pruning according to Sobolev also includes neuron pruning, which removes entire neurons. This allows for more aggressive pruning and can further enhance the efficiency of the network.

Are there any downsides to double pruning according to Sobolev?

One potential downside of double pruning according to Sobolev is the risk of removing important connections or neurons. If the pruning criteria are too aggressive, the network may lose important information and its performance may suffer. Careful consideration and experimentation with different pruning thresholds are necessary to achieve the desired balance between efficiency and performance.

Can double pruning according to Sobolev be applied to any neural network?

Double pruning according to Sobolev can be applied to a variety of neural networks, including feedforward networks, convolutional neural networks (CNNs), and recurrent neural networks (RNNs). The technique is flexible and can be adapted to different network architectures and tasks. However, the specific implementation may vary depending on the network structure and the goals of pruning.